Instructors and faculty are exploring and experimenting with generative AI in their teaching and research as these tools have become widely available and embedded in many everyday technologies. This site provides guidance, resources, and opportunities to learn more about how AI can be used in teaching and learning. The site will be continuously updated as more resources are made available and as the use of generative AI tools grows and evolves.

In March 2024, the Academic Policy Committee struck a cross-institutional sub-committee tasked with providing guidance to faculty, staff, and students on the use of generative AI in teaching and learning. The Committee continues to research and learn about these emerging tools, and to provide guidance as questions arise. The information provided here is informed by University of Windsor policies and procedures, and by the growing body of information from other institutions as we all grapple with the challenges and opportunities that generative AI offers.

Download a one-page version of these guidelines in PDF format.

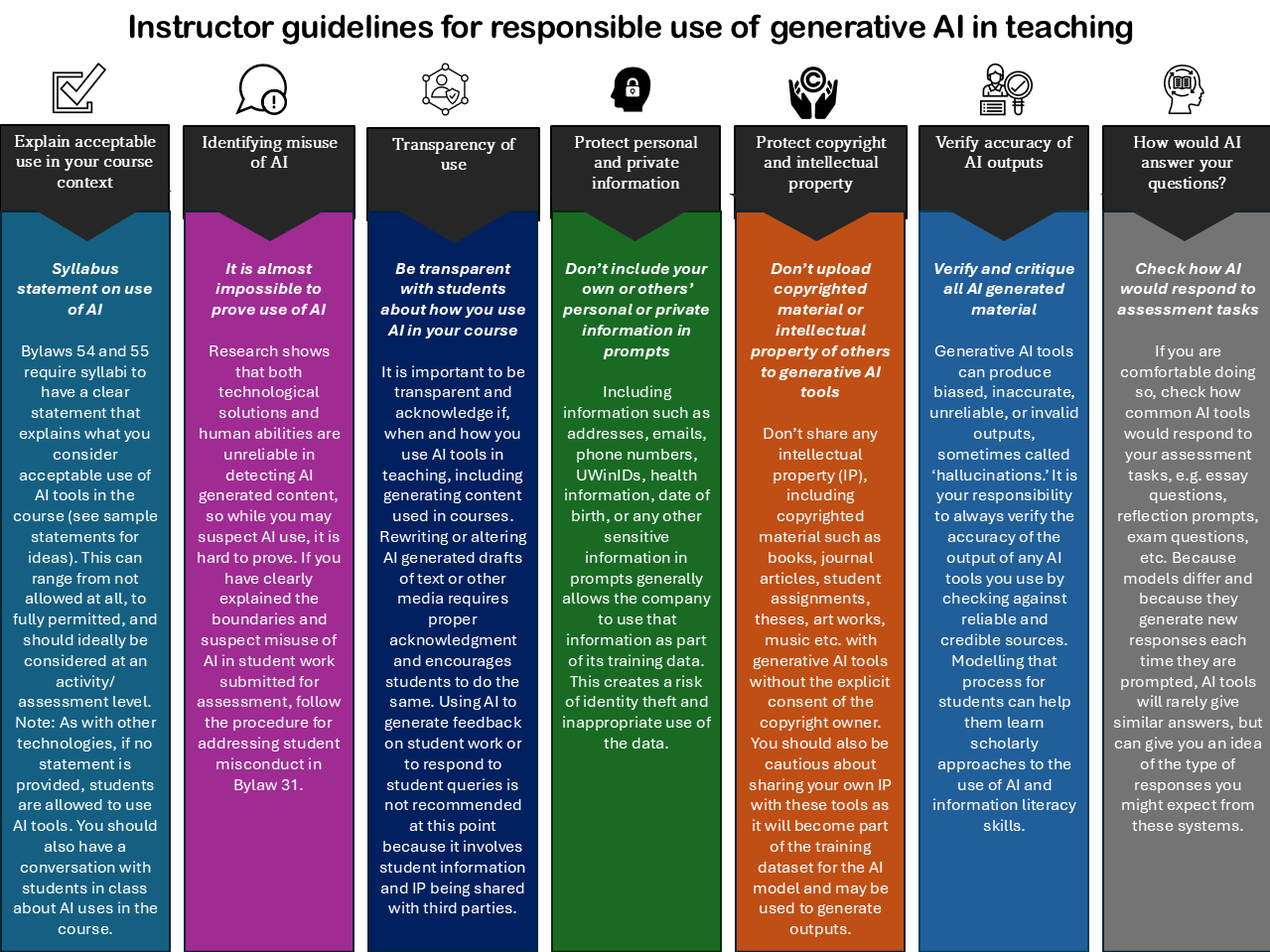

Key advice for faculty on the responsible use of generative AI in teaching includes:

Explain acceptable use in your course context

Syllabus statement on use of AI

Bylaws 54 and 55 require syllabi to have a clear statement that explains what you consider acceptable use of AI tools in the course (see sample statements for ideas). This can range from not allowed at all to fully permitted, and should ideally be considered at the level of each activity or assessment. Note: As with other technologies, if no statement to the contrary is included in the syllabus, students are allowed to use AI tools. You should also have a conversation with students in class about AI uses in the course. These example slides provide a starting point for talking with students about generative AI.

Identifying misuse of AI

It is almost impossible to prove use of AI

Research shows that both technological solutions and human judgment are unreliable in detecting AI-generated content, so while you may suspect AI use, it is hard to prove. If you have clearly explained the boundaries and suspect misuse of AI in student work submitted for assessment, follow the procedure for addressing student misconduct in Bylaw 31.

Transparency of use

Be transparent with students about how you use AI in your course

It is important to be transparent and acknowledge if, when, and how you use AI tools in teaching, including generating content used in courses. Rewriting or altering AI-generated drafts of text or other media still requires proper acknowledgment, and acknowledging your own use encourages students to do the same. Using AI to generate feedback on student work or to respond to student queries is not recommended at this point because it involves student information and IP being shared with third parties.

Protect personal and private information

Don’t include your own or others’ personal or private information in prompts

Including information such as addresses, emails, phone numbers, UWinIDs, health information, dates of birth, or any other sensitive information in prompts generally allows the company that provides the tool to use that information as part of its training data. This creates a risk of identity theft and inappropriate use of the data.

Protect copyright and intellectual property

Don’t upload copyrighted material or intellectual property of others to generative AI tools

Don’t share any intellectual property (IP), including copyrighted material such as books, journal articles, student assignments, theses, artworks, music, etc., with generative AI tools without the explicit consent of the copyright owner. You should also be cautious about sharing your own IP with these tools as it will become part of the training dataset for the AI model and may be used to generate outputs.

Verify accuracy of AI outputs

Verify and critique all AI-generated material

Generative AI tools can produce biased, inaccurate, unreliable, or invalid outputs, sometimes called ‘hallucinations.’ It is your responsibility to always verify the accuracy of the output of any AI tools you use by checking it against reliable and credible sources. Modelling that process for students can help them learn scholarly approaches to the use of AI and develop information literacy skills.

How would AI answer your questions?

Check how AI would respond to assessment tasks

If you are comfortable doing so, check how common AI tools would respond to your assessment tasks, e.g. essay questions, reflection prompts, exam questions, etc. Because models differ and because they generate new responses each time they are prompted, AI tools will rarely give identical answers, but they can give you an idea of the type of responses you might expect from these systems.

Additional considerations for instructors

- It is important to note that the University’s policies and bylaws allow students to use technologies to support their learning and academic activities unless those are specifically prohibited for a sound pedagogical reason.

- In keeping with this approach, as of Fall 2024, unless faculty have a clear statement to the contrary in their syllabus, the use of generative AI tools for academic work is permitted.

- Use of generative AI tools in ways other than those permitted in the syllabus may be considered a breach of academic integrity under the Student Code of Conduct, in which case the procedures in Bylaw 31 would be followed.

- AI detection tools are currently unreliable and should not be used as evidence of academic integrity breaches. Similarly, general AI tools such as ChatGPT or Copilot are not able to identify whether student work was generated by AI and should not be used in this way.

- If you suspect that a student has inappropriately used generative AI, follow the Academic Integrity procedure in Bylaw 31. This conversational guide may be useful in starting a conversation with students about their use of AI.

- Students and faculty who use generative AI in their academic work should acknowledge its use. While the legal implications of AI for intellectual property are still unclear and yet to be resolved in court, the current consensus is that AI cannot be a co-author or author of a work, and the person using the AI tool must take responsibility for any outputs that come from its use.

- McMaster University Library has created a helpful LibGuide on citing and acknowledging the use of generative AI in academic work.

- Generative AI tools are not reliably able to apply grading criteria to assessment and should not be used to provide grades (letter or numeric) for student work. Using generative AI tools to provide feedback on student work may be acceptable under some conditions, including:

  - Student work should not be shared with third-party applications that have not undergone a privacy and risk assessment and been endorsed by the University.

  - Any use of generative AI tools for feedback on student work should be explicitly explained in the course syllabus. For example, a publisher may provide an AI tool that automatically provides feedback on student homework, and students should be made aware that this feedback is not from the instructor.

  - Students should have the ability to opt out of receiving AI-generated feedback.

  - Instructors and GAs/TAs are responsible for the appropriateness and accuracy of all feedback provided to students, regardless of how it is generated. AI-generated feedback must be checked for accuracy and bias before being returned to the student.

Helpful resources

Slides to help start conversations with your students about AI

Download guiding questions, in Word format or PDF format, to ask your students if you suspect academic misconduct