We know that students come to university to learn and develop, and that students who graduate from the University today will enter a world where generative AI assistance is the norm, embedded in many aspects of our lives. We also know that students want to learn how to use generative AI tools in responsible, ethical, safe, and nuanced ways, and that many are already doing so.

Generative AI tools such as ChatGPT, Microsoft Copilot, and the myriad other tools developed since November 2022 both have great potential and carry multiple risks that students should be aware of as they navigate these technologies.

This page brings together the University's most current guidance on how to use generative AI tools appropriately as a UWindsor student.

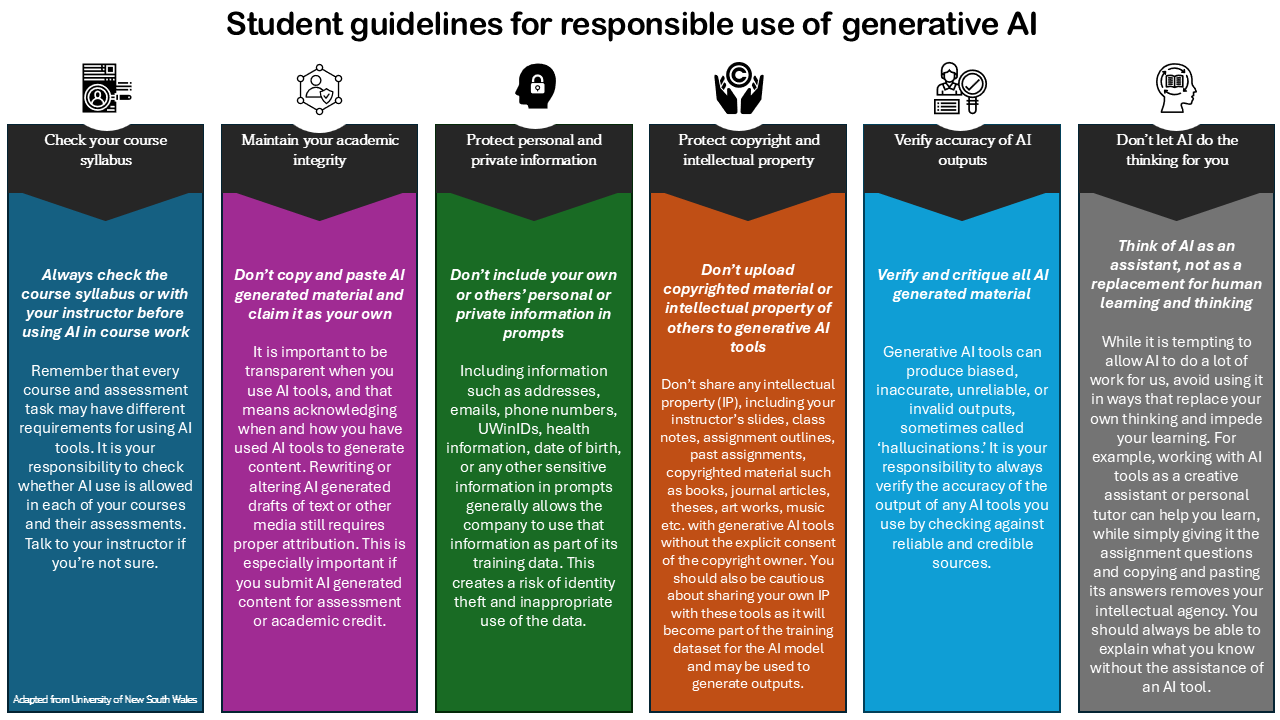

Six tips for using generative AI as a student

Let’s start with some tips that can help you use AI appropriately as a student.

1. Check your course syllabus

Always check the course syllabus, or check with your instructor, before using AI in coursework

Remember that every course and assessment task may have different requirements for using AI tools. It is your responsibility to check whether AI use is allowed in each of your courses and their assessments. Talk to your instructor if you’re not sure.

2. Maintain your academic integrity

Don’t copy and paste AI-generated material and claim it as your own

It is important to be transparent when you use AI tools, which means acknowledging when and how you have used them to generate content. Rewriting or altering AI-generated drafts of text or other media still requires proper attribution. This is especially important if you submit AI-generated content for assessment or academic credit.

3. Protect personal and private information

Don’t include your own or others’ personal or private information in prompts

Including information such as addresses, emails, phone numbers, UWinIDs, health information, dates of birth, or any other sensitive information in prompts generally allows the company that provides the tool to use that information as part of its training data. This creates a risk of identity theft and inappropriate use of the data. At present, no generative AI tools have undergone a complete privacy and risk assessment at UWindsor.

4. Protect copyright and intellectual property

Don’t upload copyrighted material or intellectual property of others to generative AI tools

Don’t share any intellectual property (IP) with generative AI tools without the explicit consent of the copyright owner. This includes your instructor’s slides, class notes, assignment outlines, and past assignments, as well as copyrighted material such as books, journal articles, theses, artworks, and music. You should also be cautious about sharing your own IP with these tools, as it may become part of the training dataset for the AI model and be used to generate outputs. At present, a number of ongoing lawsuits are attempting to clarify the copyright implications of two issues: the way publicly available data is used to train the models without the explicit consent of copyright holders, and the copyright status of content created by AI tools.

5. Verify accuracy of AI outputs

Verify and critique all AI-generated material

Generative AI tools can produce biased, inaccurate, unreliable, or invalid outputs. Fabricated content that the tool presents as fact is sometimes called a ‘hallucination.’ It is your responsibility to verify the output of any AI tool you use by checking it against reliable and credible sources.

6. Don’t let AI do the thinking for you

Think of AI as an assistant, not as a replacement for human learning and thinking

While it is tempting to let AI do much of the work for you, avoid using it in ways that replace your own thinking and impede your learning. For example, working with AI tools as a creative assistant or personal tutor can help you learn, while simply giving it the assignment questions and copying and pasting its answers removes your intellectual agency. You should always be able to explain what you know without the assistance of an AI tool.

Other important things to be aware of when using generative AI

AI syllabus statement

Starting in Fall 2024, instructors are required to include a statement in their course syllabi that clearly outlines acceptable and unacceptable uses of generative AI in their courses. As with other technologies, unless the syllabus says you can’t use generative AI, you are allowed to use these technologies for coursework.

There may be very sound pedagogical reasons why using AI is not appropriate for some tasks. Your instructors will likely have very different expectations for the use of AI in their courses, ranging from no restrictions to not allowing any use of AI. It is your responsibility to make sure you understand your instructor’s requirements before you start using AI tools for any of your assignments.

Biases

Generative AI tools are trained on large amounts of data from the internet, ranging from relatively reliable sources to crowd-sourced information with lower reliability. As a result, the models reflect the societal biases (gender, culture, language, and other biases) present in those data sets. While AI companies continue to improve models and approaches to reduce bias and prevent dangerous or discriminatory output, it is very difficult to eliminate bias entirely, and attempts to do so can have other unintended outcomes. It is important to be aware that overreliance on AI tools can perpetuate and reinforce systemic inequality and bias. Always verify any information provided to you by an AI tool!

Environmental considerations

Generative AI tools require a lot of resources to train the models and run the tools. It is very difficult to accurately estimate the amount of energy and other resources used by AI tools, especially because, even as individual tools become more efficient, the sheer number of AI tools available increases overall demand for energy. Considerable evidence is emerging that AI is substantially increasing energy and resource use globally. While some major AI companies, including Google and Microsoft, are working towards carbon neutrality for their tools, there is still a way to go. As with other limited resources, such as water, we should use AI only when it is necessary and only as much as is needed to achieve a goal.

Ethical considerations

Our understanding of the ethical considerations for the use of generative AI is still evolving. There are ethical concerns about biases, dangerous or inaccurate outputs, environmental damage caused by training and running these tools, use of material owned by others, the impact of synthetic content on disinformation, potential impacts on labour, and the impact on low-paid, marginalized workers who help to train out some of the worst content in an attempt to make the models safe.